How to Run AI Models Locally: A Beginner's Guide

DeepSeek R1 is one of the strongest open-source models available right now, and the best part is that you can run smaller versions of it on your own laptop. This guide shows you how to run open-source AI models like DeepSeek, Llama, or Mistral locally on your computer, regardless of your background.

Quick steps:

- Download Jan

- Pick a recommended model

- Start chatting

Read Quickstart to get started. For more details, keep reading.

Jan is for running AI models locally. Download Jan

Benefits of running AI locally:

- Privacy: Your data stays on your computer

- No internet needed: Use AI even offline

- No limits: Chat as much as you want

- Full control: Choose which AI models to use

How to run AI models locally as a beginner

Jan makes it straightforward to run AI models. Download Jan and you're ready to go - the setup process is streamlined and automated.

What you can do with Jan:

- Download AI models with one click

- Everything is set up automatically

- Find models that work on your computer

Understanding Local AI models

Think of AI models like engines powering applications - some are compact and efficient, while others are more powerful but require more resources. Let's understand two important terms you'll see often: parameters and quantization.

What's a "Parameter"?

When looking at AI models, you'll see names like "Llama-2-7B" or "Mistral-7B". Here's what that means:

Model sizes: Bigger models = Better results + More resources

- The "B" means "billion parameters" (the learned numbers inside the model, loosely like connections between brain cells)

- More parameters = smarter AI but needs more memory and a faster computer

- Fewer parameters = simpler AI but works on most computers
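To make the naming convention concrete, here is a minimal Python sketch that reads the parameter count out of a model name. The function name `parameter_count` and the pattern it uses are illustrative assumptions, not part of Jan or any model's tooling, and names that don't follow the "number + B" convention simply return `None`.

```python
import re
from typing import Optional


def parameter_count(model_name: str) -> Optional[int]:
    """Return the parameter count encoded in a model name, or None if absent."""
    # Look for a number immediately followed by "B" (billions), e.g. "7B" or "1.5B".
    match = re.search(r"(\d+(?:\.\d+)?)[bB]\b", model_name)
    if match is None:
        return None
    return round(float(match.group(1)) * 1_000_000_000)


for name in ("Llama-2-7B", "Mistral-7B"):
    print(name, "->", parameter_count(name), "parameters")
```

Note that in "Llama-2-7B" the "2" is a version number, not a size; the sketch skips it because it is not followed by "B".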

Which size to choose?

- 7B models: Best for most people - works on most computers

- 13B models: Smarter but needs a good graphics card

- 70B models: Very smart but needs a powerful computer

What's Quantization?

Quantization is a technique that shrinks AI models by storing their parameters with fewer bits, so they run efficiently on your computer. Think of it like an engine tuning process that balances performance with resource usage:

Quantization: Balance between size and quality

Simple guide:

- Q4: Most efficient choice - good balance of speed and quality

- Q6: Enhanced quality with moderate resource usage

- Q8: Highest quality of the three but requires the most memory and computational power

Understanding model versions:

- Original models: Full-sized versions with maximum capability (e.g., original DeepSeek)

- Distilled models: Optimized versions that maintain good performance while using fewer resources

- When you see names like "Qwen" or "Llama", these refer to different model families, each with its own architecture and training approach

Example: A 7B model with Q4 quantization provides an excellent balance for most users.
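A rough way to see why this combination is popular is to estimate how much space a model takes at each quantization level. The Python sketch below assumes the number after "Q" is roughly the number of bits stored per parameter (so Q4 is about 4 bits), and that an unquantized model uses 16 bits per parameter. Real GGUF files are somewhat larger than this estimate, and running a model needs extra memory on top of the file size, so treat the numbers as a lower bound. The function name is illustrative.

```python
def estimated_size_gb(parameters: float, bits_per_weight: float) -> float:
    """Rough model size in gigabytes (10^9 bytes): parameters * bits / 8."""
    return parameters * bits_per_weight / 8 / 1e9


# Nominal bit widths (assumption: file overhead and mixed-precision layers are ignored).
NOMINAL_BITS = {"Q4": 4, "Q6": 6, "Q8": 8, "FP16 (unquantized)": 16}

for label, bits in NOMINAL_BITS.items():
    size = estimated_size_gb(7e9, bits)
    print(f"7B model at {label}: at least {size:.2f} GB")
```

Under these assumptions a 7B model drops from about 14 GB unquantized to about 3.5 GB at Q4, which is why it fits on modest hardware.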

Hardware Requirements

Before downloading an AI model, let's check if your computer can run it.

The most important thing is VRAM:

- VRAM is your graphics card's memory

- More VRAM = ability to run bigger AI models

- Most computers have between 4GB and 16GB of VRAM

How to check your VRAM:

On Windows:

- Press Windows + R

- Type "dxdiag" and press Enter

- Click "Display" tab

- Look for "Display Memory"

On Mac:

- Click Apple menu

- Select "About This Mac"

- Click "More Info"

- Look under "Graphics/Displays"

On Linux:

- Open Terminal

- Run `nvidia-smi` (for NVIDIA GPUs)

- Or run `lspci -v | grep -i vga` (for general GPU info)
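On machines with an NVIDIA GPU, the same check can be scripted. The Python sketch below calls `nvidia-smi` with its `--query-gpu=memory.total` option and parses the result. The function names are illustrative, it only covers NVIDIA cards, and it returns an empty list when `nvidia-smi` is not installed or fails.

```python
import shutil
import subprocess


def parse_vram_mib(nvidia_smi_output: str) -> list[int]:
    """Parse one total-memory value (in MiB) per GPU from nvidia-smi CSV output."""
    values = []
    for line in nvidia_smi_output.splitlines():
        text = line.strip()
        if text.isdigit():  # skip blank lines and entries such as "[N/A]"
            values.append(int(text))
    return values


def query_vram_mib() -> list[int]:
    """Return total VRAM per NVIDIA GPU in MiB, or [] if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    return parse_vram_mib(result.stdout)


if __name__ == "__main__":
    gpus = query_vram_mib()
    if not gpus:
        print("No NVIDIA GPU detected via nvidia-smi.")
    for index, mib in enumerate(gpus):
        print(f"GPU {index}: {mib / 1024:.1f} GB VRAM")
```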

Which models can you run?

Here's a simple guide:

| Your VRAM | What You Can Run | What It Can Do |
|---|---|---|
| 4GB | Small models (1-3B) | Basic writing and questions |
| 6GB | Medium models (7B) | Good for most tasks |
| 8GB | Larger models (13B) | Better understanding |
| 16GB | Largest models (32B) | Best performance |
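If you know your VRAM figure, the table can be read as a simple lookup. The Python sketch below treats each row as a minimum threshold, which is an assumption on our part (the table does not say how to handle in-between values such as 12GB), and the function name is illustrative.

```python
# (minimum VRAM in GB, what the table suggests), highest tier first.
VRAM_TIERS = [
    (16, "Largest models (32B)"),
    (8, "Larger models (13B)"),
    (6, "Medium models (7B)"),
    (4, "Small models (1-3B)"),
]


def suggest_model_tier(vram_gb: float) -> str:
    """Return the largest tier from the table whose VRAM threshold is met."""
    for minimum_gb, tier in VRAM_TIERS:
        if vram_gb >= minimum_gb:
            return tier
    return "Below the table's smallest tier"


for vram in (4, 6, 12, 16):
    print(f"{vram}GB VRAM -> {suggest_model_tier(vram)}")
```

Rounding down to the nearest row is the cautious choice here: a model that is slightly too large for your VRAM tends to run much slower than one that fits.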

Start with smaller models:

- Try 7B models first - they work well for most people

- Test how they run on your computer

- Try larger models only if you need better results

Setting Up Your Local AI

1. Get Started

Download Jan from jan.ai - it sets everything up for you.

2. Get an AI Model

You can get models two ways:

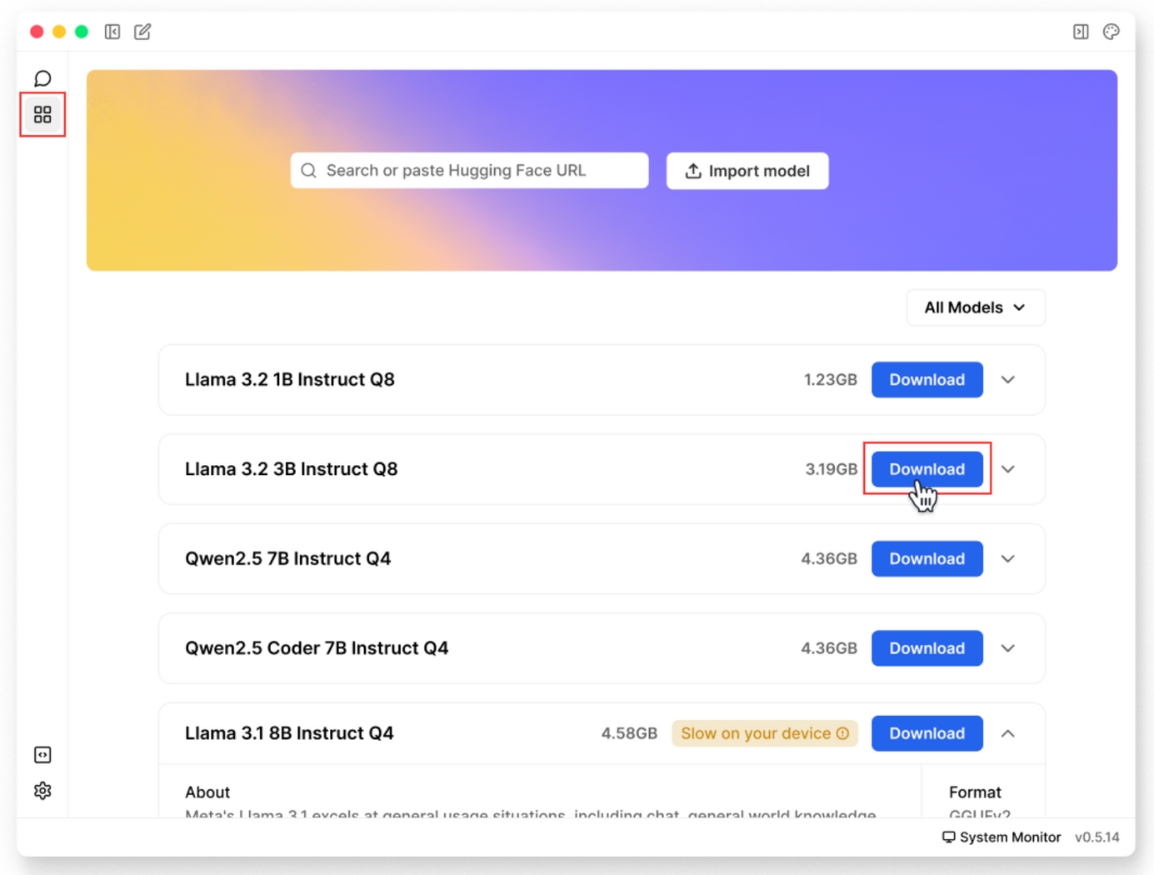

1. Use Jan Hub (Recommended):

- Click "Download Model" in Jan

- Pick a recommended model

- Choose one that fits your computer

Use Jan Hub to download AI models

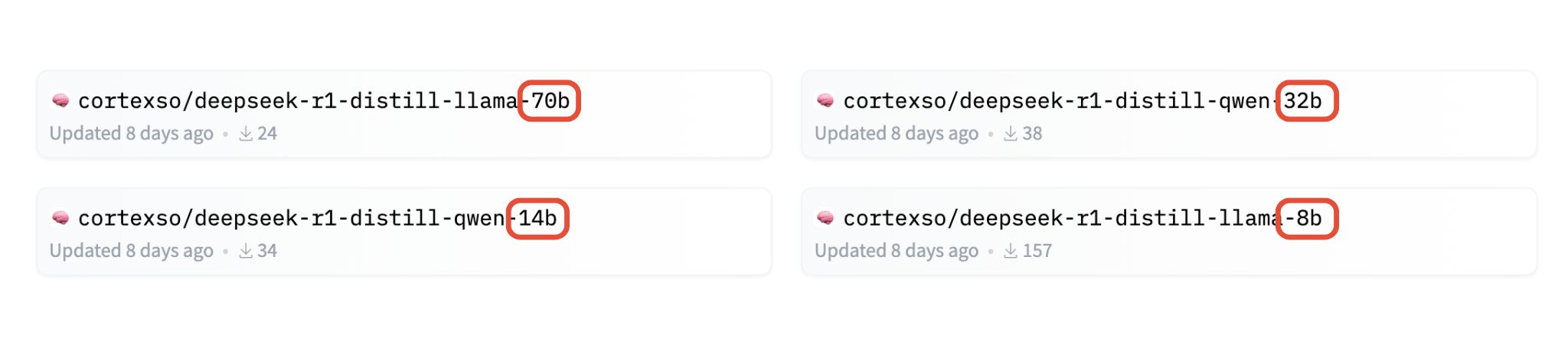

2. Use Hugging Face:

Important: Only GGUF models will work with Jan. Make sure to use models that have "GGUF" in their name.
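Beyond the file name, a downloaded file can be checked directly: GGUF files begin with the four ASCII bytes "GGUF". The Python sketch below tests for that marker. The function name is illustrative, and passing this check only shows that the file claims to be GGUF, not that the download is complete or that the model will fit on your computer.

```python
from pathlib import Path

GGUF_MAGIC = b"GGUF"  # the first four bytes of a GGUF file


def looks_like_gguf(path: str) -> bool:
    """Return True if the file exists and starts with the GGUF magic bytes."""
    file_path = Path(path)
    if not file_path.is_file():
        return False
    with file_path.open("rb") as handle:
        return handle.read(4) == GGUF_MAGIC


if __name__ == "__main__":
    import sys

    # Usage: python check_gguf.py path/to/model.gguf
    for candidate in sys.argv[1:]:
        verdict = "looks like GGUF" if looks_like_gguf(candidate) else "not a GGUF file"
        print(f"{candidate}: {verdict}")
```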

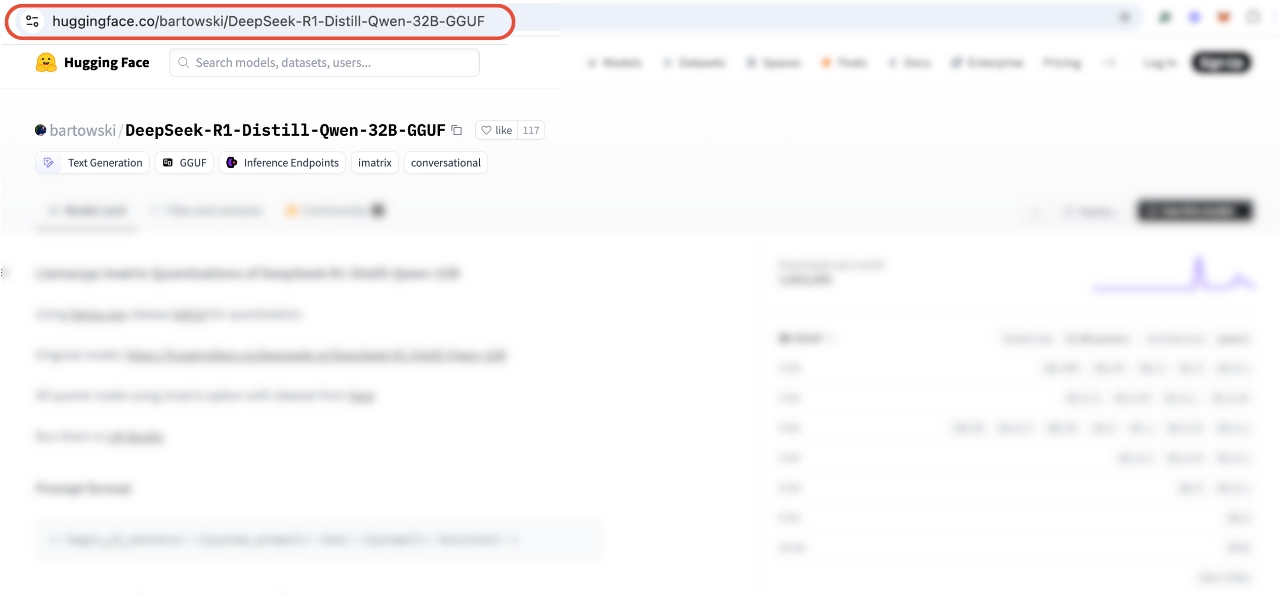

Step 1: Get the model link

Find and copy a GGUF model link from Hugging Face

Look for models with "GGUF" in their name

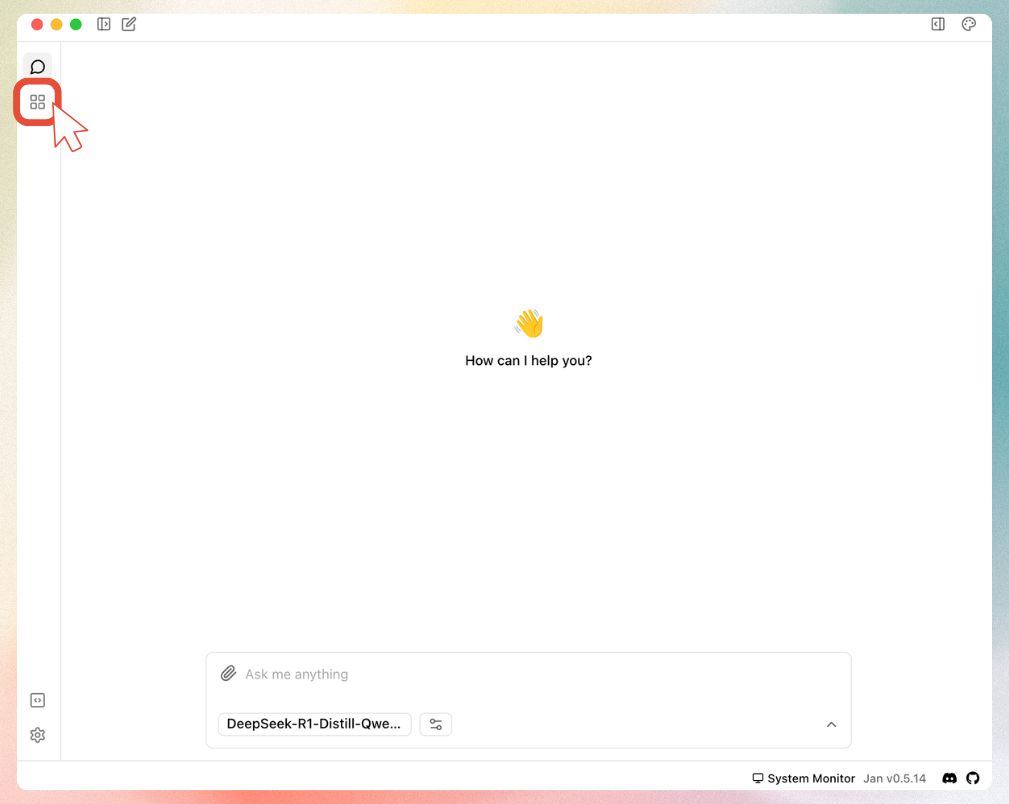

Step 2: Open Jan

Launch Jan and go to the Models tab

Navigate to the Models section in Jan

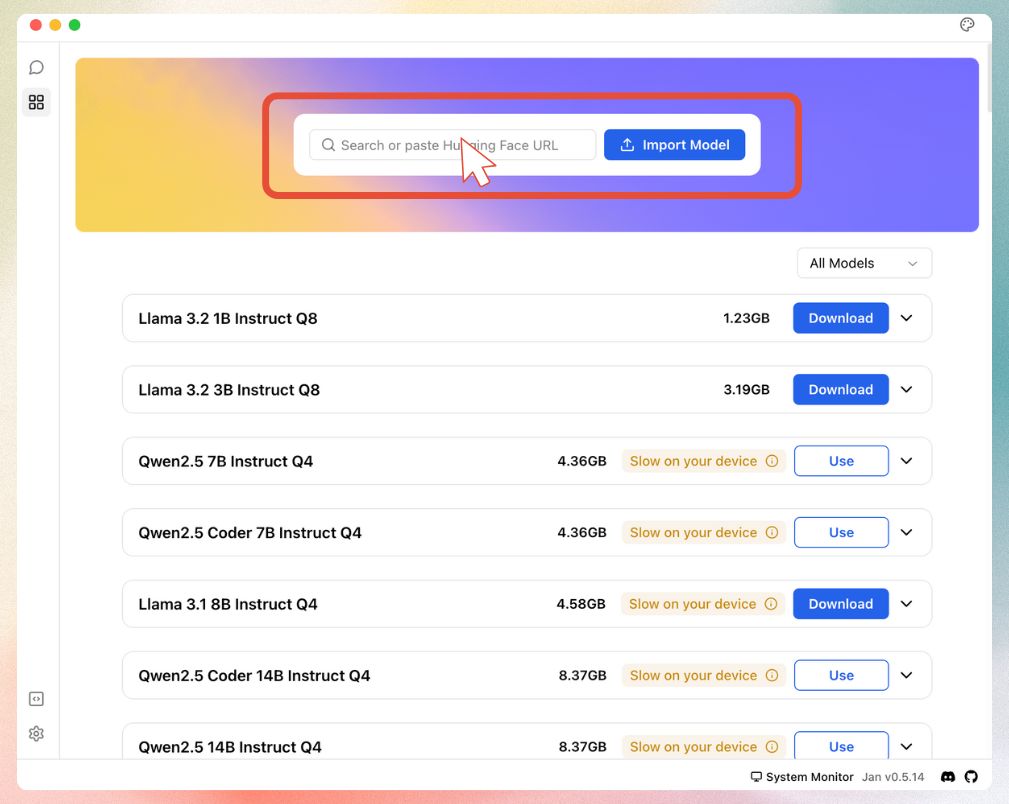

Step 3: Add the model

Paste your Hugging Face link into Jan

Paste your GGUF model link here

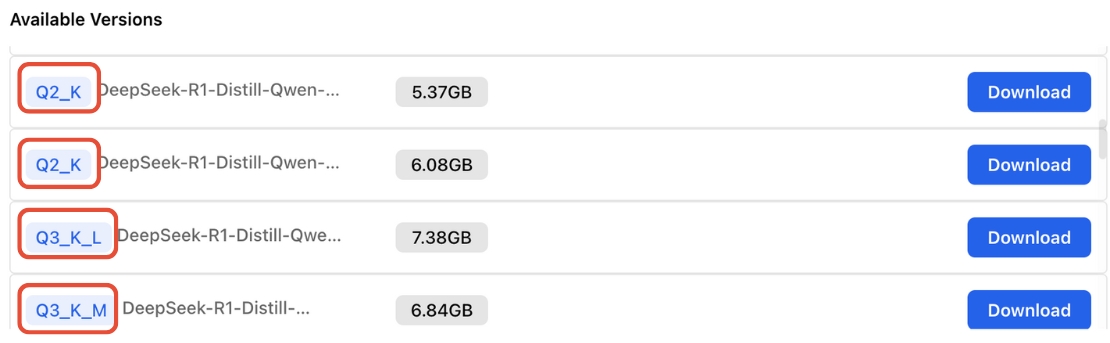

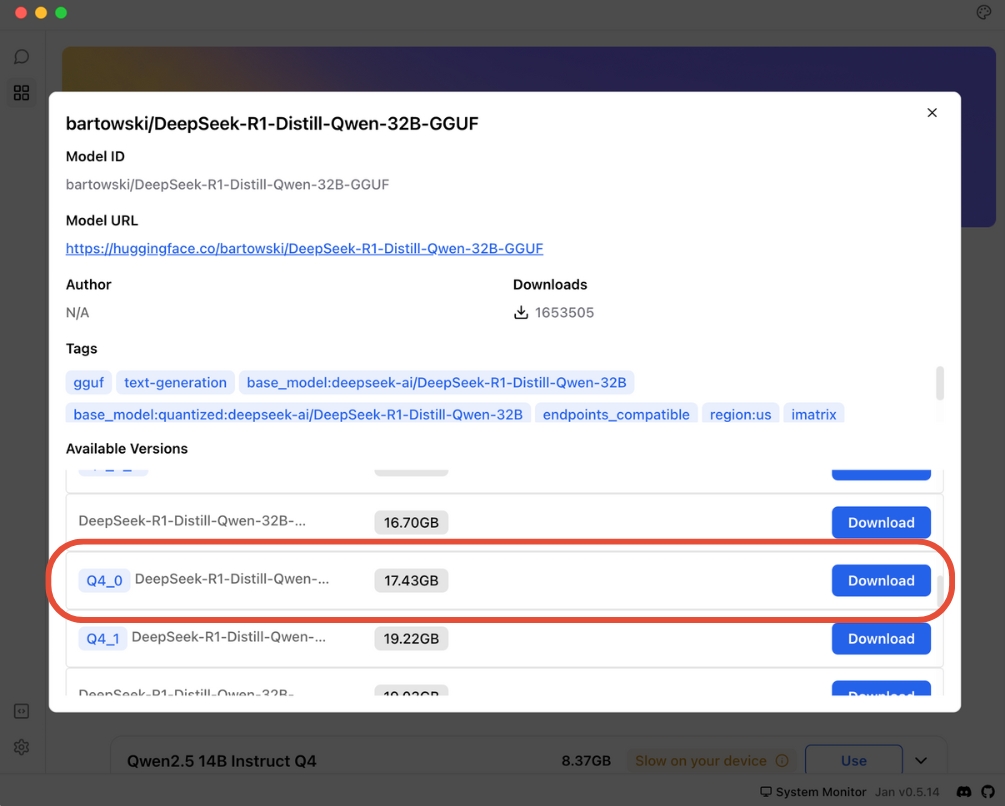

Step 4: Download

Select your quantization and start the download

Choose your preferred model size and download

Common Questions

"My computer doesn't have a graphics card - can I still use AI?" Yes! It will run slower but still work. Start with 7B models.

"Which model should I start with?" Try a 7B model first - it's the best balance of smart and fast.

"Will it slow down my computer?" Only while you're using the AI. Close other big programs for better speed.

Need help?

Having trouble? We're here to help! Join our Discord community for support.